A new online tool uses cause-and-effect relationships to explore and better understand responsible AI development and use. From the Future of Being Human Substack.

Several weeks ago I was asked to produce a learning module on the ethical and responsible development and use of AI. It was to be part of a new undergraduate course on AI literacy. But I had a problem: everything about AI is moving so fast that any set-in-stone course material would almost certainly be out of date before it was first taught.

Plus, let’s be honest, who wants a dry lecture from a “talking head” about AI ethics and responsibility — important as they are?

And so I started playing with different ways to help students think about the challenges and opportunities of AI, including fostering a responsible-use mindset rather than simply focusing on facts and figures.

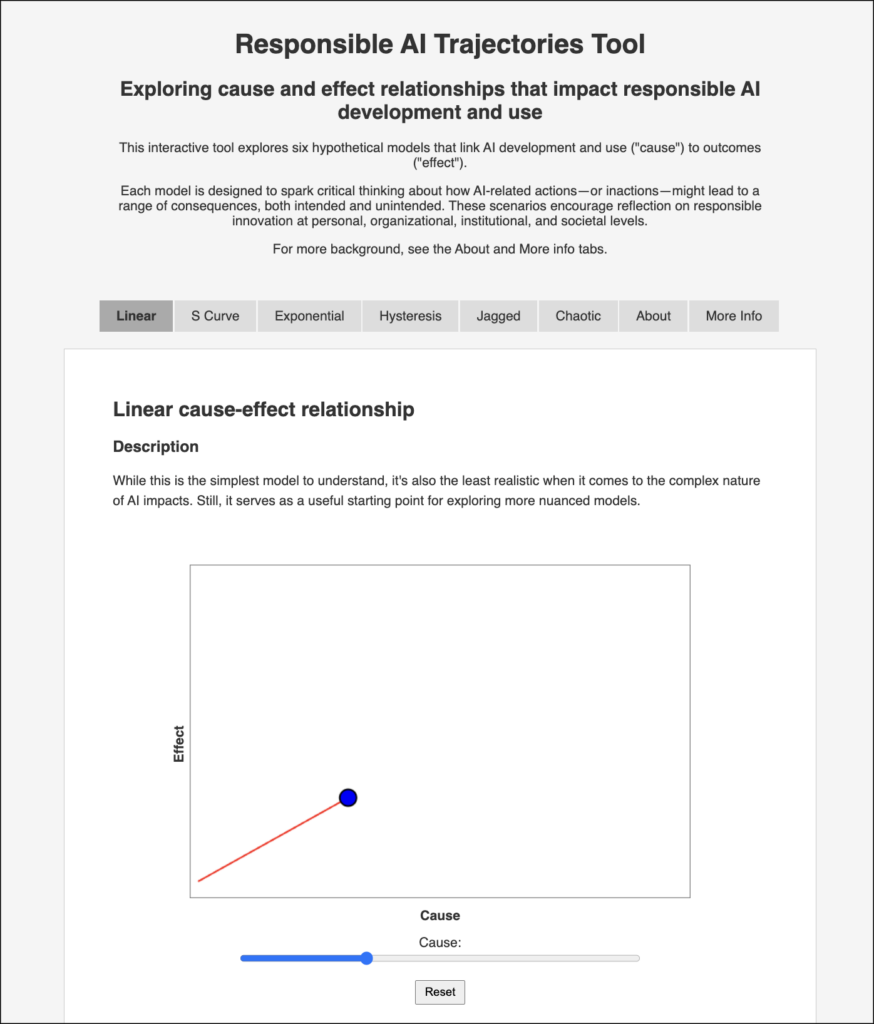

The result was a very simple online tool that allows users to explore AI development and use through the lens of cause and effect. It is available at raitool.org.

The Responsible AI Trajectories Tool presents users with six interactive cause-effect relationships and encourages them to think about which of these might apply to the AI use cases they face, and how this might guide their decisions and actions. It also comes with illustrative examples and a bunch of additional resources.

Of course, thinking about responsible and ethical AI through the lens of cause and effect is fraught with problems. On the face of it, it feels too cut and dried: a utilitarian approach to solving problems that ignores the messiness of how people, society and technologies intersect and intertwine.

And yet, as you’ll see if you play with the tool, there is enough nuance here for this messiness to be recognized and approached thoughtfully.

The best way to explore the tool is to play with it. But I’ve also included an edited version of the script used in the course module below, which goes into the background behind the tool and its use in more depth …
