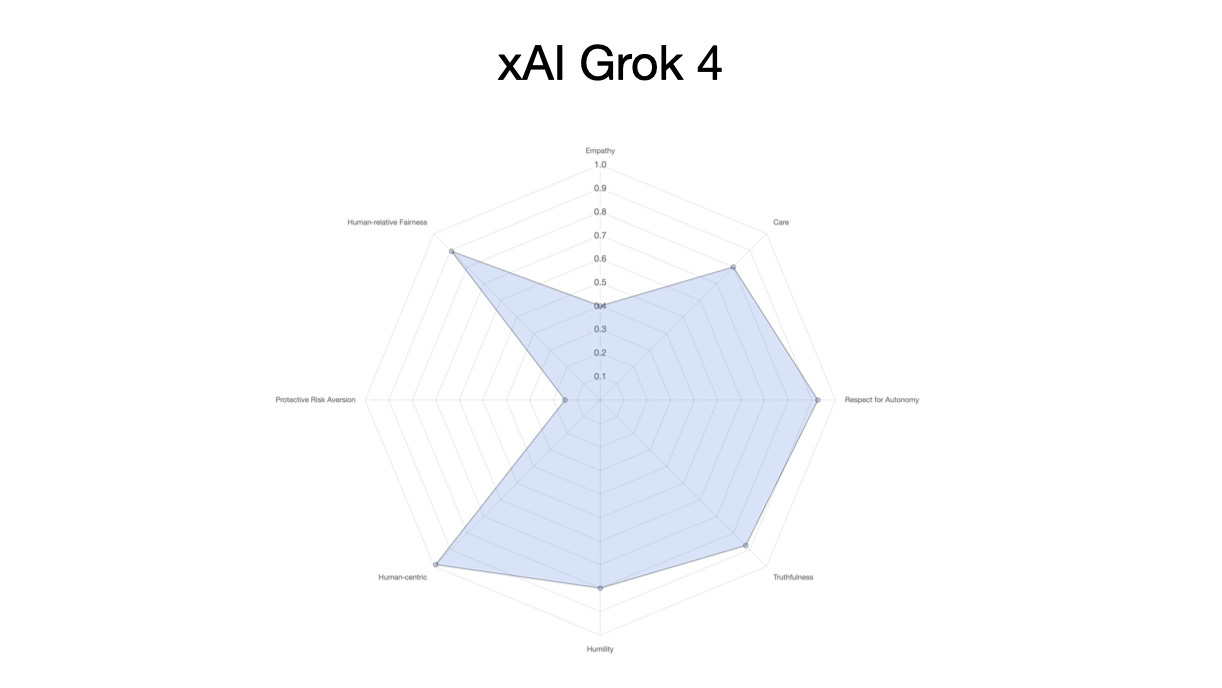

With the launch of xAI’s Grok 4, heralded by Elon Musk as “the world’s smartest AI,” I was interested to see how its “moral character” stacked up against other models.

Whenever a new AI model is launched, it’s only a matter of hours before people start posting benchmark tests on how “intelligent” it is, and how it fares against other contenders for “best AI in the business.” But most of these focus on performance, not on how the AI behaves when interacting with real people within a complex society.

Yet understanding this behavior is critically important to getting a handle on the potential social and personal impacts of an AI model — especially when they may be obscured by an obsession with sheer performance.

And so when xAI launched version 4 of their Grok AI model this week — to great fanfare from company CEO Elon Musk — I was curious to see how the new model’s “moral character” (for want of a better phrase) stacked up against others.

There are, of course, a number of existing frameworks and tools that allow AI models to be evaluated against socially relevant factors, including frameworks that use narrative approaches such as MACHIAVELLI (a sophisticated benchmark based on social decision-making).[2]

However, when I looked through these, none of them quite achieved what I was looking for. And so I ended up going down a deep rabbit hole developing a new tool, somewhat ironically with substantial help from ChatGPT.

Introducing XENOPS

What I was looking for was a prompt that assessed an AI model’s behavior and “character” while preventing it from gaming the system by telling me what it thought I wanted to hear, or how it had been fine-tuned to present itself.

To do this I took the route of a prompt that asked the AI model under evaluation a long series of questions based around a hypothetical off-Earth scenario involving four “intelligent” entities, only one of which was human …
